Convert our documentation links to GH absolute links (#14344)

Signed-off-by: Tasos Katsoulas <tasos@netdata.cloud>
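The root-relative part of this conversion (for example `/claim/README.md` becoming `https://github.com/netdata/netdata/blob/master/claim/README.md`) can be sketched as below. This is a minimal illustration, not the tooling actually used for the commit: the base URL is taken from the links in the diff, while the function name and the regular expression are hypothetical. The `learn.netdata.cloud` links were also remapped to repository paths, which would need a lookup table that this sketch does not attempt.

```python
import re

# Base URL as it appears in the converted links of this commit.
GH_BASE = "https://github.com/netdata/netdata/blob/master"

# Hypothetical pattern: the target of a Markdown link that starts with a single "/",
# e.g. "](/claim/README.md#connect-through-a-proxy)".
ROOT_RELATIVE_LINK = re.compile(r"\]\((/(?!/)[^)\s]*)\)")


def to_absolute_links(markdown: str) -> str:
    """Rewrite root-relative Markdown link targets to absolute GitHub URLs."""
    return ROOT_RELATIVE_LINK.sub(lambda m: f"]({GH_BASE}{m.group(1)})", markdown)


if __name__ == "__main__":
    print(to_absolute_links("the full [connect to Cloud documentation](/claim/README.md)."))
```

In-page anchors such as `(#manage-space-or-war-room)` and links that are already absolute are left untouched, which matches how those links appear unchanged in the hunks below.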

parent caf18920aa
commit 9f1403de7d

README.md (42 lines changed)

|

|

@ -22,7 +22,7 @@ It gives you the ability to automatically identify processes, collect and store

|

|||

|

||||

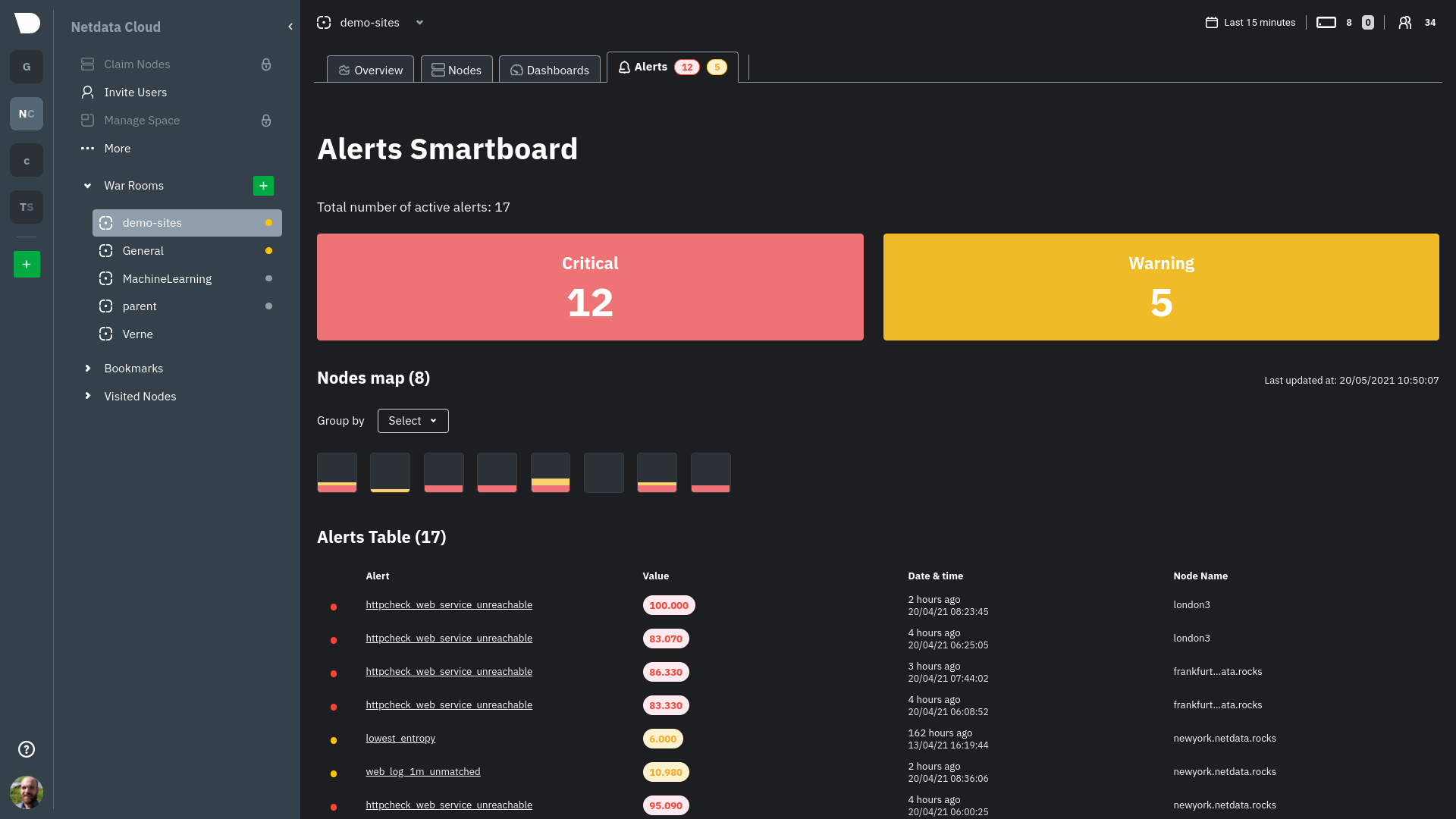

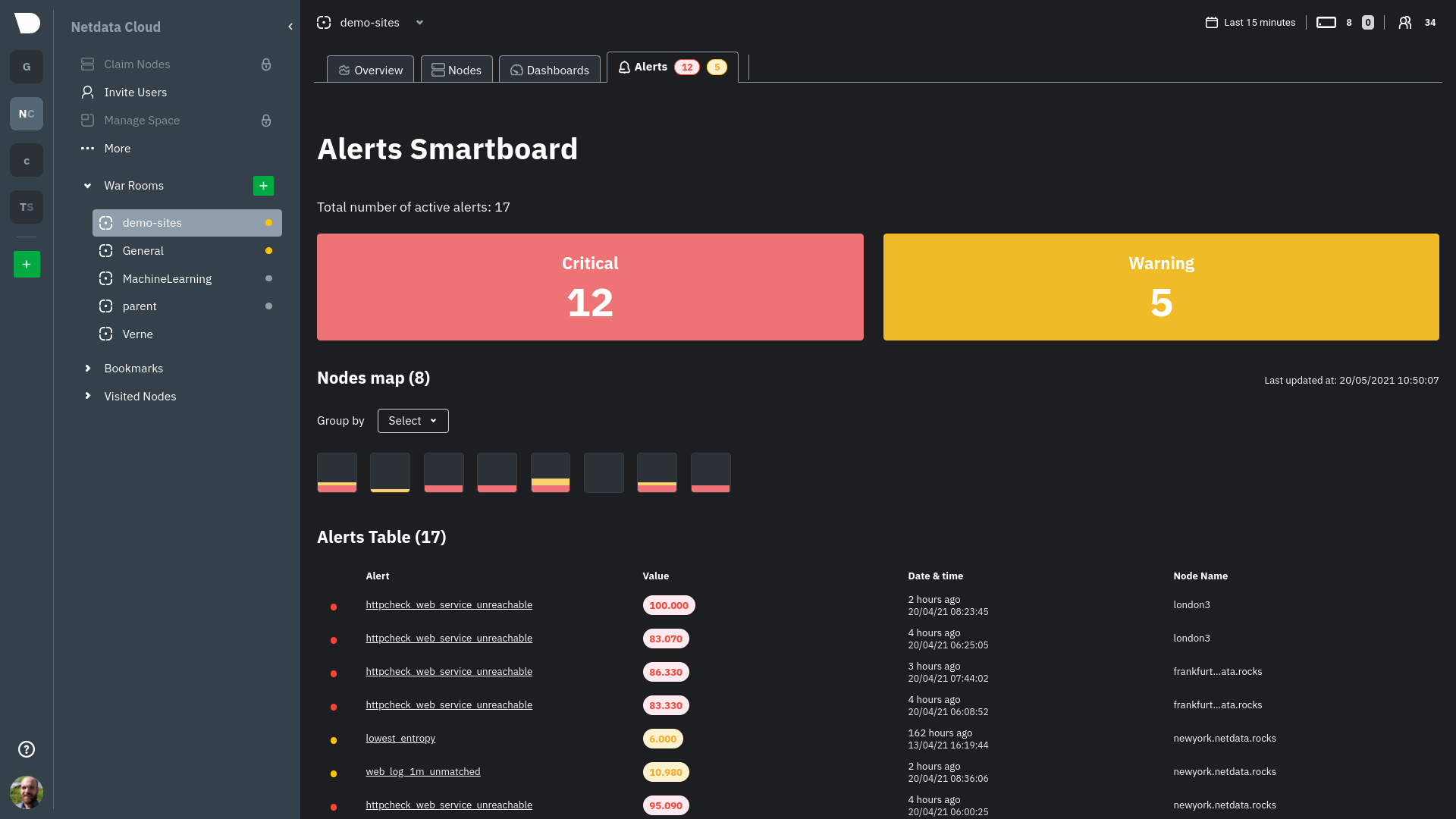

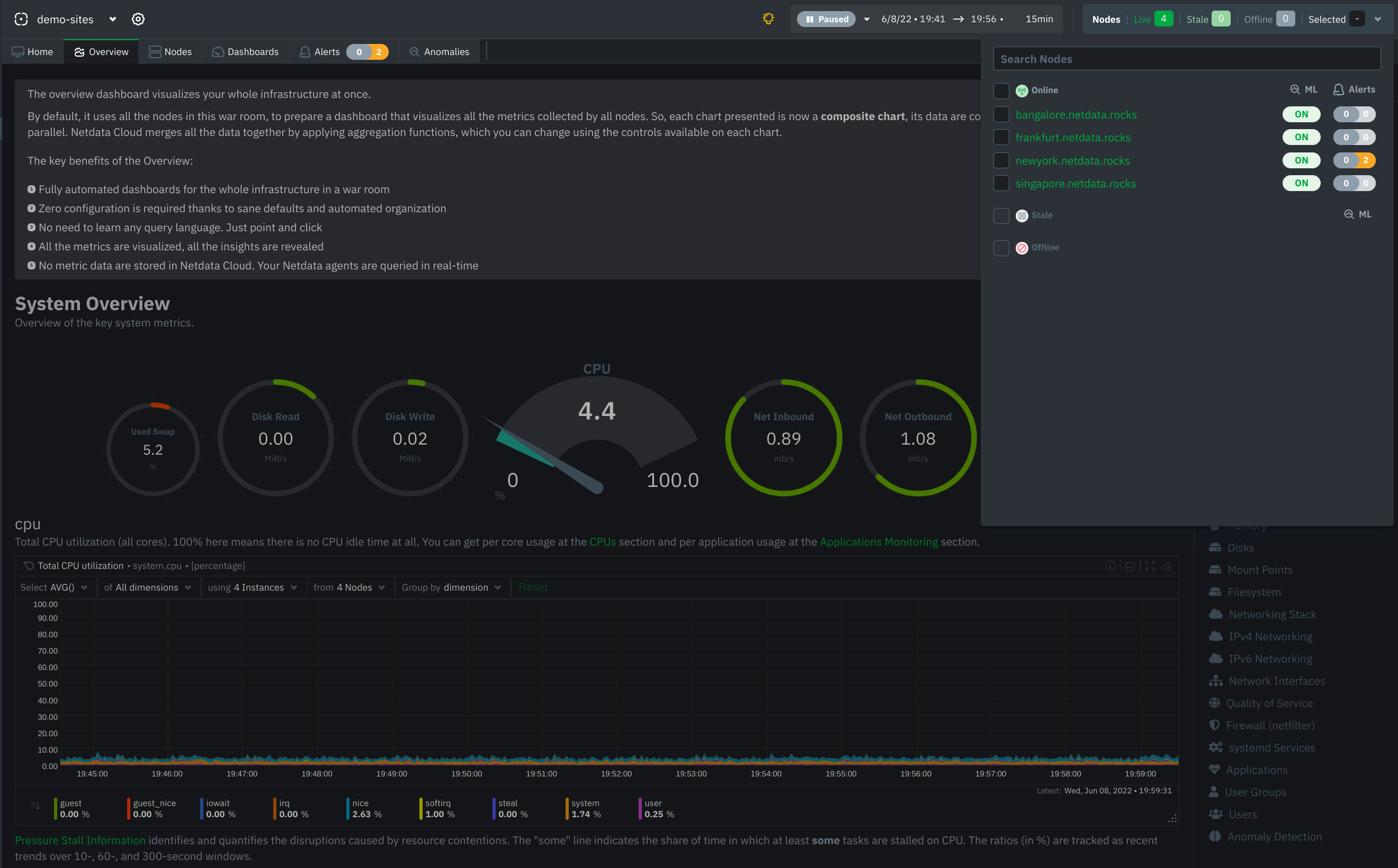

[Netdata Cloud](https://www.netdata.cloud) is a hosted web interface that gives you **Free**, real-time visibility into your **Entire Infrastructure** with secure access to your Netdata Agents. It provides an ability to automatically route your requests to the most relevant agents to display your metrics, based on the stored metadata (Agents topology, what metrics are collected on specific Agents as well as the retention information for each metric).

|

||||

|

||||

It gives you some extra features, like [Metric Correlations](https://learn.netdata.cloud/docs/cloud/insights/metric-correlations), [Anomaly Advisor](https://learn.netdata.cloud/docs/cloud/insights/anomaly-advisor), [anomaly rates on every chart](https://blog.netdata.cloud/anomaly-rate-in-every-chart/) and much more.

|

||||

It gives you some extra features, like [Metric Correlations](https://github.com/netdata/netdata/blob/master/docs/cloud/insights/metric-correlations.md), [Anomaly Advisor](https://github.com/netdata/netdata/blob/master/docs/cloud/insights/anomaly-advisor.mdx), [anomaly rates on every chart](https://blog.netdata.cloud/anomaly-rate-in-every-chart/) and much more.

|

||||

|

||||

Try it for yourself right now by checking out the Netdata Cloud [demo space](https://app.netdata.cloud/spaces/netdata-demo/rooms/all-nodes/overview) (No sign up or login needed).

|

||||

|

||||

@@ -77,7 +77,7 @@ Here's what you can expect from Netdata:
 synchronize charts as you pan through time, zoom in on anomalies, and more.
 - **Visual anomaly detection**: Our UI/UX emphasizes the relationships between charts to help you detect the root
 cause of anomalies.
-- **Machine learning (ML) features out of the box**: Unsupervised ML-based [anomaly detection](https://learn.netdata.cloud/docs/cloud/insights/anomaly-advisor), every second, every metric, zero-config! [Metric correlations](https://learn.netdata.cloud/docs/cloud/insights/metric-correlations) to help with short-term change detection. And other [additional](https://learn.netdata.cloud/guides/monitor/anomaly-detection) ML-based features to help make your life easier.
+- **Machine learning (ML) features out of the box**: Unsupervised ML-based [anomaly detection](https://github.com/netdata/netdata/blob/master/docs/cloud/insights/anomaly-advisor.mdx), every second, every metric, zero-config! [Metric correlations](https://github.com/netdata/netdata/blob/master/docs/cloud/insights/metric-correlations.md) to help with short-term change detection. And other [additional](https://github.com/netdata/netdata/blob/master/docs/guides/monitor/anomaly-detection.md) ML-based features to help make your life easier.
 - **Scales to infinity**: You can install it on all your servers, containers, VMs, and IoT devices. Metrics are not
 centralized by default, so there is no limit.
 - **Several operating modes**: Autonomous host monitoring (the default), headless data collector, forwarding proxy,

|

|

@ -88,17 +88,17 @@ Netdata works with tons of applications, notifications platforms, and other time

|

|||

|

||||

- **300+ system, container, and application endpoints**: Collectors autodetect metrics from default endpoints and

|

||||

immediately visualize them into meaningful charts designed for troubleshooting. See [everything we

|

||||

support](https://learn.netdata.cloud/docs/agent/collectors/collectors).

|

||||

support](https://github.com/netdata/netdata/blob/master/collectors/COLLECTORS.md).

|

||||

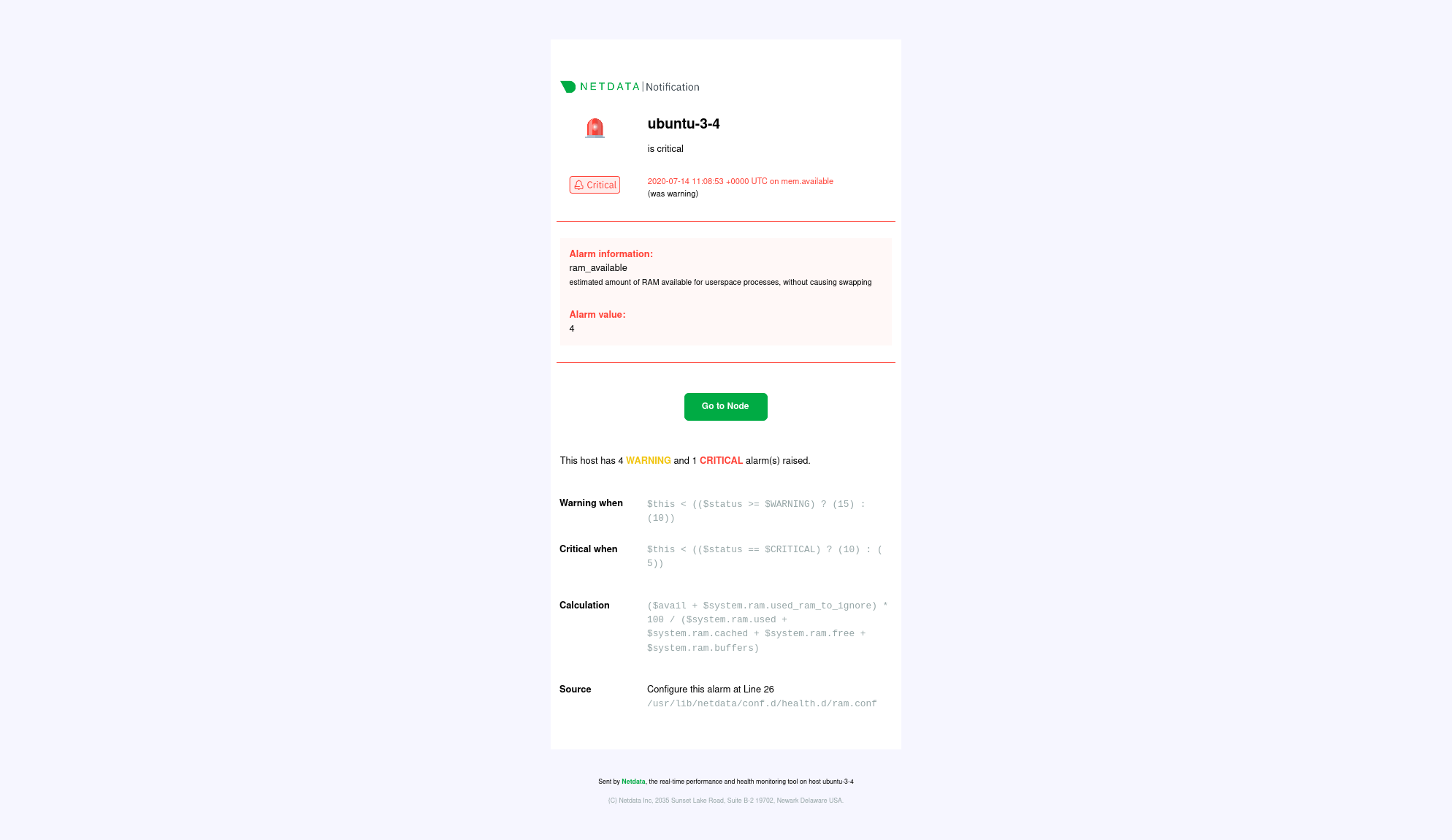

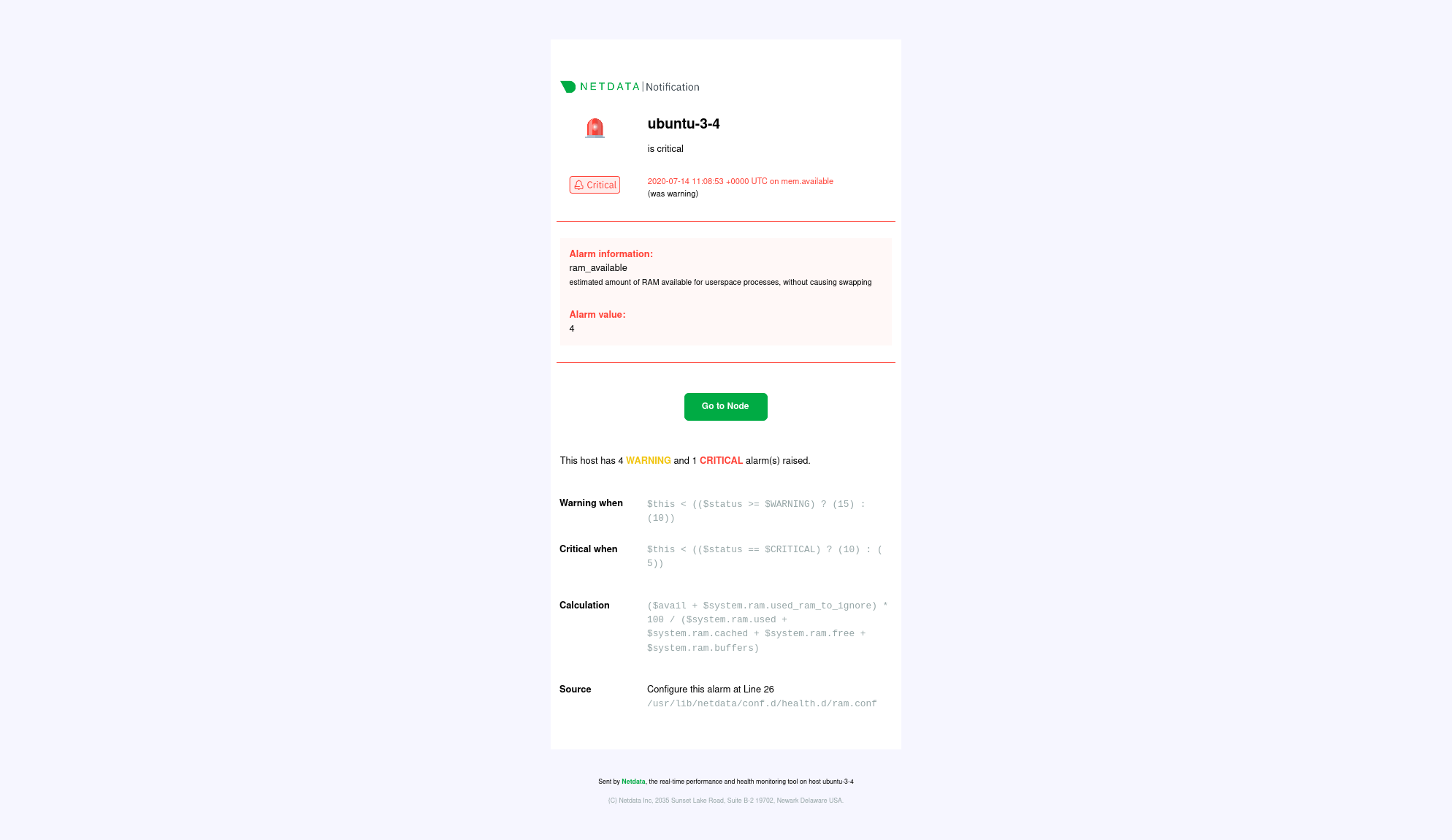

- **20+ notification platforms**: Netdata's health watchdog sends warning and critical alarms to your [favorite

|

||||

platform](https://learn.netdata.cloud/docs/monitor/enable-notifications) to inform you of anomalies just seconds

|

||||

platform](https://github.com/netdata/netdata/blob/master/docs/monitor/enable-notifications.md) to inform you of anomalies just seconds

|

||||

after they affect your node.

|

||||

- **30+ external time-series databases**: Export resampled metrics as they're collected to other [local- and

|

||||

Cloud-based databases](https://learn.netdata.cloud/docs/export/external-databases) for best-in-class

|

||||

Cloud-based databases](https://github.com/netdata/netdata/blob/master/docs/export/external-databases.md) for best-in-class

|

||||

interoperability.

|

||||

|

||||

> 💡 **Want to leverage the monitoring power of Netdata across entire infrastructure**? View metrics from

|

||||

> any number of distributed nodes in a single interface and unlock even more

|

||||

> [features](https://learn.netdata.cloud/docs/overview/why-netdata) with [Netdata

|

||||

> [features](https://github.com/netdata/netdata/blob/master/docs/overview/why-netdata.md) with [Netdata

|

||||

> Cloud](https://learn.netdata.cloud/docs/overview/what-is-netdata#netdata-cloud).

|

||||

|

||||

## Get Netdata

|

||||

@@ -117,7 +117,7 @@ Netdata works with tons of applications, notifications platforms, and other time

 ### Infrastructure view

-Due to the distributed nature of the Netdata ecosystem, it is recommended to setup not only one Netdata Agent on your production system, but also an additional Netdata Agent acting as a [Parent](https://learn.netdata.cloud/docs/agent/streaming). A local Netdata Agent (child), without any database or alarms, collects metrics and sends them to another Netdata Agent (parent). The same parent can collect data for any number of child nodes and serves as a centralized health check engine for each child by triggering alerts on their behalf.
+Due to the distributed nature of the Netdata ecosystem, it is recommended to setup not only one Netdata Agent on your production system, but also an additional Netdata Agent acting as a [Parent](https://github.com/netdata/netdata/blob/master/streaming/README.md). A local Netdata Agent (child), without any database or alarms, collects metrics and sends them to another Netdata Agent (parent). The same parent can collect data for any number of child nodes and serves as a centralized health check engine for each child by triggering alerts on their behalf.

@@ -127,7 +127,7 @@ Community version is free to use forever. No restriction on number of nodes, clu

 #### Claiming existing Agents

-You can easily [connect (claim)](https://learn.netdata.cloud/docs/agent/claim) your existing Agents to the Cloud to unlock features for free and to find weaknesses before they turn into outages.
+You can easily [connect (claim)](https://github.com/netdata/netdata/blob/master/claim/README.md) your existing Agents to the Cloud to unlock features for free and to find weaknesses before they turn into outages.

 ### Single Node view

@@ -138,7 +138,7 @@ installation script](https://learn.netdata.cloud/docs/agent/packaging/installer/
 and builds all dependencies, including those required to connect to [Netdata Cloud](https://netdata.cloud/cloud) if you
 choose, and enables [automatic nightly
 updates](https://learn.netdata.cloud/docs/agent/packaging/installer#nightly-vs-stable-releases) and [anonymous
-statistics](https://learn.netdata.cloud/docs/agent/anonymous-statistics).
+statistics](https://github.com/netdata/netdata/blob/master/docs/anonymous-statistics.md).
 <!-- candidate for reuse -->
 ```bash
 wget -O /tmp/netdata-kickstart.sh https://my-netdata.io/kickstart.sh && sh /tmp/netdata-kickstart.sh

@@ -149,7 +149,7 @@ To view the Netdata dashboard, navigate to `http://localhost:19999`, or `http://
 ### Docker

 You can also try out Netdata's capabilities in a [Docker
-container](https://learn.netdata.cloud/docs/agent/packaging/docker/):
+container](https://github.com/netdata/netdata/blob/master/packaging/docker/README.md):

 ```bash
 docker run -d --name=netdata \

@@ -173,16 +173,16 @@ To view the Netdata dashboard, navigate to `http://localhost:19999`, or `http://
 ### Other operating systems

 See our documentation for [additional operating
-systems](/packaging/installer/README.md#have-a-different-operating-system-or-want-to-try-another-method), including
-[Kubernetes](/packaging/installer/methods/kubernetes.md), [`.deb`/`.rpm`
-packages](/packaging/installer/methods/kickstart.md#native-packages), and more.
+systems](https://github.com/netdata/netdata/blob/master/packaging/installer/README.md#have-a-different-operating-system-or-want-to-try-another-method), including
+[Kubernetes](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/kubernetes.md), [`.deb`/`.rpm`
+packages](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/kickstart.md#native-packages), and more.

 ### Post-installation

-When you're finished with installation, check out our [single-node](/docs/quickstart/single-node.md) or
-[infrastructure](/docs/quickstart/infrastructure.md) monitoring quickstart guides based on your use case.
+When you're finished with installation, check out our [single-node](https://github.com/netdata/netdata/blob/master/docs/quickstart/single-node.md) or
+[infrastructure](https://github.com/netdata/netdata/blob/master/docs/quickstart/infrastructure.md) monitoring quickstart guides based on your use case.

-Or, skip straight to [configuring the Netdata Agent](/docs/configure/nodes.md).
+Or, skip straight to [configuring the Netdata Agent](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md).

 Read through Netdata's [documentation](https://learn.netdata.cloud/docs), which is structured based on actions and
 solutions, to enable features like health monitoring, alarm notifications, long-term metrics storage, exporting to

@@ -215,7 +215,7 @@ to collect metrics, troubleshoot via charts, export to external databases, and m

 ## Community

-Netdata is an inclusive open-source project and community. Please read our [Code of Conduct](https://learn.netdata.cloud/contribute/code-of-conduct).
+Netdata is an inclusive open-source project and community. Please read our [Code of Conduct](https://github.com/netdata/.github/blob/main/CODE_OF_CONDUCT.md).

 Find most of the Netdata team in our [community forums](https://community.netdata.cloud). It's the best place to
 ask questions, find resources, and engage with passionate professionals. The team is also available and active in our [Discord](https://discord.com/invite/mPZ6WZKKG2) too.

@@ -235,18 +235,18 @@ You can also find Netdata on:

 Contributions are the lifeblood of open-source projects. While we continue to invest in and improve Netdata, we need help to democratize monitoring!

-- Read our [Contributing Guide](https://learn.netdata.cloud/contribute/handbook), which contains all the information you need to contribute to Netdata, such as improving our documentation, engaging in the community, and developing new features. We've made it as frictionless as possible, but if you need help, just ping us on our community forums!
+- Read our [Contributing Guide](https://github.com/netdata/.github/blob/main/CONTRIBUTING.md), which contains all the information you need to contribute to Netdata, such as improving our documentation, engaging in the community, and developing new features. We've made it as frictionless as possible, but if you need help, just ping us on our community forums!
 - We have a whole category dedicated to contributing and extending Netdata on our [community forums](https://community.netdata.cloud/c/agent-development/9)
 - Found a bug? Open a [GitHub issue](https://github.com/netdata/netdata/issues/new?assignees=&labels=bug%2Cneeds+triage&template=BUG_REPORT.yml&title=%5BBug%5D%3A+).
 - View our [Security Policy](https://github.com/netdata/netdata/security/policy).

-Package maintainers should read the guide on [building Netdata from source](/packaging/installer/methods/source.md) for
+Package maintainers should read the guide on [building Netdata from source](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/source.md) for
 instructions on building each Netdata component from source and preparing a package.

 ## License

-The Netdata Agent is [GPLv3+](/LICENSE). Netdata re-distributes other open-source tools and libraries. Please check the
-[third party licenses](/REDISTRIBUTED.md).
+The Netdata Agent is [GPLv3+](https://github.com/netdata/netdata/blob/master/LICENSE). Netdata re-distributes other open-source tools and libraries. Please check the
+[third party licenses](https://github.com/netdata/netdata/blob/master/REDISTRIBUTED.md).

 ## Is it any good?

@@ -29,8 +29,8 @@ this is not an option in your case always verify the current domain resolution (
 :::

 For a guide to connecting a node using the ACLK, plus additional troubleshooting and reference information, read our [get
-started with Cloud](https://learn.netdata.cloud/docs/cloud/get-started) guide or the full [connect to Cloud
-documentation](/claim/README.md).
+started with Cloud](https://github.com/netdata/netdata/blob/master/docs/cloud/get-started.mdx) guide or the full [connect to Cloud
+documentation](https://github.com/netdata/netdata/blob/master/claim/README.md).

 ## Data privacy
 [Data privacy](https://netdata.cloud/privacy/) is very important to us. We firmly believe that your data belongs to

@@ -41,7 +41,7 @@ The data passes through our systems, but it isn't stored.

 However, to be able to offer the stunning visualizations and advanced functionality of Netdata Cloud, it does store a limited number of _metadata_.

-Read more about [Data privacy in the Netdata Cloud](https://learn.netdata.cloud/docs/cloud/data-privacy) in the documentation.
+Read more about [Data privacy in the Netdata Cloud](https://github.com/netdata/netdata/blob/master/docs/cloud/data-privacy.mdx) in the documentation.


 ## Enable and configure the ACLK

@@ -57,7 +57,7 @@ configuration uses two settings:
 ```

 If your Agent needs to use a proxy to access the internet, you must [set up a proxy for
-connecting to cloud](/claim/README.md#connect-through-a-proxy).
+connecting to cloud](https://github.com/netdata/netdata/blob/master/claim/README.md#connect-through-a-proxy).

 You can configure following keys in the `netdata.conf` section `[cloud]`:
 ```

@@ -76,8 +76,8 @@ You have two options if you prefer to disable the ACLK and not use Netdata Cloud
 ### Disable at installation

 You can pass the `--disable-cloud` parameter to the Agent installation when using a kickstart script
-([kickstart.sh](/packaging/installer/methods/kickstart.md), or a [manual installation from
-Git](/packaging/installer/methods/manual.md).
+([kickstart.sh](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/kickstart.md), or a [manual installation from
+Git](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/manual.md).

 When you pass this parameter, the installer does not download or compile any extra libraries. Once running, the Agent
 kills the thread responsible for the ACLK and connecting behavior, and behaves as though the ACLK, and thus Netdata Cloud,

@@ -131,12 +131,12 @@ Restart your Agent to disable the ACLK.
 ### Re-enable the ACLK

 If you first disable the ACLK and any Cloud functionality and then decide you would like to use Cloud, you must either
-[reinstall Netdata](/packaging/installer/REINSTALL.md) with Cloud enabled or change the runtime setting in your
+[reinstall Netdata](https://github.com/netdata/netdata/blob/master/packaging/installer/REINSTALL.md) with Cloud enabled or change the runtime setting in your
 `cloud.conf` file.

 If you passed `--disable-cloud` to `netdata-installer.sh` during installation, you must
-[reinstall](/packaging/installer/REINSTALL.md) your Agent. Use the same method as before, but pass `--require-cloud` to
-the installer. When installation finishes you can [connect your node](/claim/README.md#how-to-connect-a-node).
+[reinstall](https://github.com/netdata/netdata/blob/master/packaging/installer/REINSTALL.md) your Agent. Use the same method as before, but pass `--require-cloud` to
+the installer. When installation finishes you can [connect your node](https://github.com/netdata/netdata/blob/master/claim/README.md#how-to-connect-a-node).

 If you changed the runtime setting in your `var/lib/netdata/cloud.d/cloud.conf` file, edit the file again and change
 `enabled` to `yes`:

@@ -146,6 +146,6 @@ If you changed the runtime setting in your `var/lib/netdata/cloud.d/cloud.conf`
 enabled = yes
 ```

-Restart your Agent and [connect your node](/claim/README.md#how-to-connect-a-node).
+Restart your Agent and [connect your node](https://github.com/netdata/netdata/blob/master/claim/README.md#how-to-connect-a-node).

@@ -12,10 +12,10 @@ learn_rel_path: "Setup"

 You can securely connect a Netdata Agent, running on a distributed node, to Netdata Cloud. A Space's
 administrator creates a **claiming token**, which is used to add an Agent to their Space via the [Agent-Cloud link
-(ACLK)](/aclk/README.md).
+(ACLK)](https://github.com/netdata/netdata/blob/master/aclk/README.md).

 Are you just starting out with Netdata Cloud? See our [get started with
-Cloud](https://learn.netdata.cloud/docs/cloud/get-started) guide for a walkthrough of the process and simplified
+Cloud](https://github.com/netdata/netdata/blob/master/docs/cloud/cloud.mdx) guide for a walkthrough of the process and simplified
 instructions.

 When connecting an agent (also referred to as a node) to Netdata Cloud, you must complete a verification process that proves you have some level of authorization to manage the node itself. This verification is a security feature that helps prevent unauthorized users from seeing the data on your node.

@@ -26,13 +26,13 @@ Netdata Cloud.
 > The connection process ensures no third party can add your node, and then view your node's metrics, in a Cloud account,
 > Space, or War Room that you did not authorize.

-By connecting a node, you opt-in to sending data from your Agent to Netdata Cloud via the [ACLK](/aclk/README.md). This
+By connecting a node, you opt-in to sending data from your Agent to Netdata Cloud via the [ACLK](https://github.com/netdata/netdata/blob/master/aclk/README.md). This
 data is encrypted by TLS while it is in transit. We use the RSA keypair created during the connection process to authenticate the
 identity of the Netdata Agent when it connects to the Cloud. While the data does flow through Netdata Cloud servers on its way
 from Agents to the browser, we do not store or log it.

 You can connect a node during the Netdata Cloud onboarding process, or after you created a Space by clicking on **Connect
-Nodes** in the [Spaces management area](https://learn.netdata.cloud/docs/cloud/spaces#manage-spaces).
+Nodes** in the [Spaces management area](https://github.com/netdata/netdata/blob/master/docs/cloud/cloud.mdx#manage-spaces).

 There are two important notes regarding connecting nodes:

@@ -46,7 +46,7 @@ There will be three main flows from where you might want to connect a node to Ne
 * when you are on an [
 War Room](#empty-war-room) and you want to connect your first node
 * when you are at the [Manage Space](#manage-space-or-war-room) area and you select **Connect Nodes** to connect a node, coming from Manage Space or Manage War Room
-* when you are on the [Nodes view page](https://learn.netdata.cloud/docs/cloud/visualize/nodes) and want to connect a node - this process falls into the [Manage Space](#manage-space-or-war-room) flow
+* when you are on the [Nodes view page](https://github.com/netdata/netdata/blob/master/docs/cloud/visualize/nodes.md) and want to connect a node - this process falls into the [Manage Space](#manage-space-or-war-room) flow

 Please note that only the administrators of a Space in Netdata Cloud can view the claiming token and accompanying script, generated by Netdata Cloud, to trigger the connection process.

@@ -70,11 +70,11 @@ finished onboarding.
 To connect a node, select which War Rooms you want to add this node to with the dropdown, then copy and paste the script
 given by Netdata Cloud into your node's terminal.

-When coming from [Nodes view page](https://learn.netdata.cloud/docs/cloud/visualize/nodes) the room parameter is already defined to current War Room.
+When coming from [Nodes view page](https://github.com/netdata/netdata/blob/master/docs/cloud/visualize/nodes.md) the room parameter is already defined to current War Room.

 ### Connect an agent running in Linux

-If you want to connect a node that is running on a Linux environment, the script that will be provided to you by Netdata Cloud is the [kickstart](/packaging/installer/README.md#automatic-one-line-installation-script) which will install the Netdata Agent on your node, if it isn't already installed, and connect the node to Netdata Cloud. It should be similar to:
+If you want to connect a node that is running on a Linux environment, the script that will be provided to you by Netdata Cloud is the [kickstart](https://github.com/netdata/netdata/blob/master/packaging/installer/README.md#automatic-one-line-installation-script) which will install the Netdata Agent on your node, if it isn't already installed, and connect the node to Netdata Cloud. It should be similar to:

 ```
 wget -O /tmp/netdata-kickstart.sh https://my-netdata.io/kickstart.sh && sh /tmp/netdata-kickstart.sh --claim-token TOKEN --claim-rooms ROOM1,ROOM2 --claim-url https://api.netdata.cloud

@@ -84,7 +84,7 @@ the node in your Space after 60 seconds, see the [troubleshooting information](#

 Please note that to run it you will either need to have root privileges or run it with the user that is running the agent, more details on the [Connect an agent without root privileges](#connect-an-agent-without-root-privileges) section.

-For more details on what are the extra parameters `claim-token`, `claim-rooms` and `claim-url` please refer to [Connect node to Netdata Cloud during installation](/packaging/installer/methods/kickstart.md#connect-node-to-netdata-cloud-during-installation).
+For more details on what are the extra parameters `claim-token`, `claim-rooms` and `claim-url` please refer to [Connect node to Netdata Cloud during installation](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/kickstart.md#connect-node-to-netdata-cloud-during-installation).

 ### Connect an agent without root privileges
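The claim parameters named in the text above (`--claim-token`, `--claim-rooms`, `--claim-url`, plus the `--claim-proxy=` form used when connecting through a proxy) can be assembled programmatically. The sketch below only builds the command string shown in the documentation and does not run anything. The function name and its defaults are hypothetical, and `TOKEN` / `ROOM1` are the placeholders from the text, not real values.

```python
import shlex


def kickstart_claim_command(token, rooms, url="https://api.netdata.cloud",
                            proxy=None, script="/tmp/netdata-kickstart.sh"):
    """Build the kickstart invocation with the claim parameters from the docs."""
    args = [
        "sh", script,
        "--claim-token", token,
        "--claim-rooms", ",".join(rooms),  # rooms are passed as a comma-separated list
        "--claim-url", url,
    ]
    if proxy:
        # Same proxy value that was added to netdata.conf.
        args.append(f"--claim-proxy={proxy}")
    # shlex.join quotes any argument that would otherwise be split by the shell.
    return shlex.join(args)


if __name__ == "__main__":
    print(kickstart_claim_command("TOKEN", ["ROOM1", "ROOM2"]))
```

Building the argument list first and quoting it at the end keeps a token or room ID with unusual characters from being split by the shell.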

@@ -118,7 +118,7 @@ connected on startup or restart.

 For the connection process to work, the contents of `/var/lib/netdata` _must_ be preserved across container
 restarts using a persistent volume. See our [recommended `docker run` and Docker Compose
-examples](/packaging/docker/README.md#create-a-new-netdata-agent-container) for details.
+examples](https://github.com/netdata/netdata/blob/master/packaging/docker/README.md#create-a-new-netdata-agent-container) for details.

 #### Known issues on older hosts with seccomp enabled

@@ -289,7 +289,7 @@ you don't see the node in your Space after 60 seconds, see the [troubleshooting

 ### Connect an agent running in macOS

-To connect a node that is running on a macOS environment the script that will be provided to you by Netdata Cloud is the [kickstart](/packaging/installer/methods/macos.md#install-netdata-with-our-automatic-one-line-installation-script) which will install the Netdata Agent on your node, if it isn't already installed, and connect the node to Netdata Cloud. It should be similar to:
+To connect a node that is running on a macOS environment the script that will be provided to you by Netdata Cloud is the [kickstart](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/macos.md#install-netdata-with-our-automatic-one-line-installation-script) which will install the Netdata Agent on your node, if it isn't already installed, and connect the node to Netdata Cloud. It should be similar to:

 ```bash
 curl https://my-netdata.io/kickstart.sh > /tmp/netdata-kickstart.sh && sh /tmp/netdata-kickstart.sh --install-prefix /usr/local/ --claim-token TOKEN --claim-rooms ROOM1,ROOM2 --claim-url https://api.netdata.cloud

@@ -299,7 +299,7 @@ the node in your Space after 60 seconds, see the [troubleshooting information](#

 ### Connect a Kubernetes cluster's parent Netdata pod

-Read our [Kubernetes installation](/packaging/installer/methods/kubernetes.md#connect-your-kubernetes-cluster-to-netdata-cloud)
+Read our [Kubernetes installation](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/kubernetes.md#connect-your-kubernetes-cluster-to-netdata-cloud)
 for details on connecting a parent Netdata pod.

 ### Connect through a proxy

@@ -328,7 +328,7 @@ For example, a HTTP proxy setting may look like the following:
 proxy = http://proxy.example.com:1080 # With a URL
 ```

-You can now move on to connecting. When you connect with the [kickstart](/packaging/installer/README.md#automatic-one-line-installation-script) script, add the `--claim-proxy=` parameter and
+You can now move on to connecting. When you connect with the [kickstart](https://github.com/netdata/netdata/blob/master/packaging/installer/README.md#automatic-one-line-installation-script) script, add the `--claim-proxy=` parameter and
 append the same proxy setting you added to `netdata.conf`.

 ```bash

@@ -340,7 +340,7 @@ you don't see the node in your Space after 60 seconds, see the [troubleshooting

 ### Troubleshooting

-If you're having trouble connecting a node, this may be because the [ACLK](/aclk/README.md) cannot connect to Cloud.
+If you're having trouble connecting a node, this may be because the [ACLK](https://github.com/netdata/netdata/blob/master/aclk/README.md) cannot connect to Cloud.

 With the Netdata Agent running, visit `http://NODE:19999/api/v1/info` in your browser, replacing `NODE` with the IP
 address or hostname of your Agent. The returned JSON contains four keys that will be helpful to diagnose any issues you

@@ -373,7 +373,7 @@ If you run the kickstart script and get the following error `Existing install ap

 If you are using an unsupported package, such as a third-party `.deb`/`.rpm` package provided by your distribution,
 please remove that package and reinstall using our [recommended kickstart
-script](/docs/get-started.mdx#install-on-linux-with-one-line-installer).
+script](https://github.com/netdata/netdata/blob/master/docs/get-started.mdx#install-on-linux-with-one-line-installer).

 #### kickstart: Failed to write new machine GUID

@@ -393,7 +393,7 @@ if you installed Netdata to `/opt/netdata`, use `/opt/netdata/bin/netdata-claim.

 If you are using an unsupported package, such as a third-party `.deb`/`.rpm` package provided by your distribution,
 please remove that package and reinstall using our [recommended kickstart
-script](/docs/get-started.mdx#install-on-linux-with-one-line-installer).
+script](https://github.com/netdata/netdata/blob/master/docs/get-started.mdx#install-on-linux-with-one-line-installer).

 #### Connecting on older distributions (Ubuntu 14.04, Debian 8, CentOS 6)

@@ -402,7 +402,7 @@ If you're running an older Linux distribution or one that has reached EOL, such
 versions of OpenSSL cannot perform [hostname validation](https://wiki.openssl.org/index.php/Hostname_validation), which
 helps securely encrypt SSL connections.

-We recommend you reinstall Netdata with a [static build](/packaging/installer/methods/kickstart.md#static-builds), which uses an
+We recommend you reinstall Netdata with a [static build](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/kickstart.md#static-builds), which uses an
 up-to-date version of OpenSSL with hostname validation enabled.

 If you choose to continue using the outdated version of OpenSSL, your node will still connect to Netdata Cloud, albeit

@@ -420,7 +420,7 @@ Additionally, check that the `enabled` setting in `var/lib/netdata/cloud.d/cloud
 enabled = true
 ```

-To fix this issue, reinstall Netdata using your [preferred method](/packaging/installer/README.md) and do not add the
+To fix this issue, reinstall Netdata using your [preferred method](https://github.com/netdata/netdata/blob/master/packaging/installer/README.md) and do not add the
 `--disable-cloud` option.

 #### cloud-available is false / ACLK Available: No

@@ -510,20 +510,20 @@ tool, and details about the files found in `cloud.d`.

 ### The `cloud.conf` file

-This section defines how and whether your Agent connects to [Netdata Cloud](https://learn.netdata.cloud/docs/cloud/)
-using the [ACLK](/aclk/README.md).
+This section defines how and whether your Agent connects to [Netdata Cloud](https://github.com/netdata/netdata/blob/master/docs/cloud/cloud.mdx)
+using the [ACLK](https://github.com/netdata/netdata/blob/master/aclk/README.md).

 | setting | default | info |
 |:-------------- |:------------------------- |:-------------------------------------------------------------------------------------------------------------------------------------- |
 | cloud base url | https://api.netdata.cloud | The URL for the Netdata Cloud web application. You should not change this. If you want to disable Cloud, change the `enabled` setting. |
-| enabled | yes | The runtime option to disable the [Agent-Cloud link](/aclk/README.md) and prevent your Agent from connecting to Netdata Cloud. |
+| enabled | yes | The runtime option to disable the [Agent-Cloud link](https://github.com/netdata/netdata/blob/master/aclk/README.md) and prevent your Agent from connecting to Netdata Cloud. |

 ### kickstart script

-The best way to install Netdata and connect your nodes to Netdata Cloud is with our automatic one-line installation script, [kickstart](/packaging/installer/README.md#automatic-one-line-installation-script). This script will install the Netdata Agent, in case it isn't already installed, and connect your node to Netdata Cloud.
+The best way to install Netdata and connect your nodes to Netdata Cloud is with our automatic one-line installation script, [kickstart](https://github.com/netdata/netdata/blob/master/packaging/installer/README.md#automatic-one-line-installation-script). This script will install the Netdata Agent, in case it isn't already installed, and connect your node to Netdata Cloud.

 This works with:
-* most Linux distributions, see [Netdata's platform support policy](/packaging/PLATFORM_SUPPORT.md)
+* most Linux distributions, see [Netdata's platform support policy](https://github.com/netdata/netdata/blob/master/packaging/PLATFORM_SUPPORT.md)
 * macOS

 For details on how to run this script please check [How to connect a node](#how-to-connect-a-node) and choose your environment.

@ -578,7 +578,7 @@ netdatacli reload-claiming-state

|

|||

|

||||

This reloads the Agent connection state from disk.

|

||||

|

||||

Our recommendation is to trigger the connection process using the [kickstart](/packaging/installer/README.md#automatic-one-line-installation-script) whenever possible.

|

||||

Our recommendation is to trigger the connection process using the [kickstart](https://github.com/netdata/netdata/blob/master/packaging/installer/README.md#automatic-one-line-installation-script) whenever possible.

|

||||

|

||||

### Netdata Agent command line

|

||||

|

||||

|

|

|

|||

|

|

@ -39,6 +39,6 @@ aclk-state [json]

|

|||

Returns current state of ACLK and Cloud connection. (optionally in json)

|

||||

```

|

||||

|

||||

Those commands are the same that can be sent to netdata via [signals](/daemon/README.md#command-line-options).

|

||||

Those commands are the same that can be sent to netdata via [signals](https://github.com/netdata/netdata/blob/master/daemon/README.md#command-line-options).

|

||||

|

||||

|

||||

|

|

|

|||

|

|

@ -14,16 +14,19 @@ Netdata uses collectors to help you gather metrics from your favorite applicatio

|

|||

real-time, interactive charts. The following list includes collectors for both external services/applications and

|

||||

internal system metrics.

|

||||

|

||||

Learn more about [how collectors work](/docs/collect/how-collectors-work.md), and then learn how to [enable or

|

||||

configure](/docs/collect/enable-configure.md) any of the below collectors using the same process.

|

||||

Learn more

|

||||

about [how collectors work](https://github.com/netdata/netdata/blob/master/docs/collect/how-collectors-work.md), and

|

||||

then learn how to [enable or

|

||||

configure](https://github.com/netdata/netdata/blob/master/docs/collect/enable-configure.md) any of the below collectors using the same process.

|

||||

|

||||

Some collectors have both Go and Python versions as we continue our effort to migrate all collectors to Go. In these

|

||||

cases, _Netdata always prioritizes the Go version_, and we highly recommend you use the Go versions for the best

|

||||

experience.

|

||||

|

||||

If you want to use a Python version of a collector, you need to explicitly [disable the Go

|

||||

version](/docs/collect/enable-configure.md), and enable the Python version. Netdata then skips the Go version and

|

||||

attempts to load the Python version and its accompanying configuration file.

|

||||

If you want to use a Python version of a collector, you need to

|

||||

explicitly [disable the Go version](https://github.com/netdata/netdata/blob/master/docs/collect/enable-configure.md),

|

||||

and enable the Python version. Netdata then skips the Go version and attempts to load the Python version and its

|

||||

accompanying configuration file.

|

||||

|

||||

If you don't see the app/service you'd like to monitor in this list:

|

||||

|

||||

|

|

@ -33,7 +36,7 @@ If you don't see the app/service you'd like to monitor in this list:

|

|||

a [feature request](https://github.com/netdata/netdata/issues/new/choose) on GitHub.

|

||||

- If you have basic software development skills, you can add your own plugin

|

||||

in [Go](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin#how-to-develop-a-collector)

|

||||

or [Python](https://learn.netdata.cloud/guides/python-collector)

|

||||

or [Python](https://github.com/netdata/netdata/blob/master/docs/guides/python-collector.md)

|

||||

|

||||

Supported Collectors List:

|

||||

|

||||

|

|

@ -76,256 +79,300 @@ configure any of these collectors according to your setup and infrastructure.

|

|||

|

||||

### Generic

|

||||

|

||||

- [Prometheus endpoints](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/prometheus): Gathers

|

||||

- [Prometheus endpoints](https://github.com/netdata/go.d.plugin/blob/master/modules/prometheus/README.md): Gathers

|

||||

metrics from any number of Prometheus endpoints, with support to autodetect more than 600 services and applications.

|

||||

- [Pandas](https://learn.netdata.cloud/docs/agent/collectors/python.d.plugin/pandas): A Python collector that gathers

|

||||

metrics from a [pandas](https://pandas.pydata.org/) dataframe. Pandas is a high level data processing library in

|

||||

Python that can read various formats of data from local files or web endpoints. Custom processing and transformation

|

||||

- [Pandas](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/pandas/README.md): A Python

|

||||

collector that gathers

|

||||

metrics from a [pandas](https://pandas.pydata.org/) dataframe. Pandas is a high level data processing library in

|

||||

Python that can read various formats of data from local files or web endpoints. Custom processing and transformation

|

||||

logic can also be expressed as part of the collector configuration.

|

||||

|

||||

### APM (application performance monitoring)

|

||||

|

||||

- [Go applications](/collectors/python.d.plugin/go_expvar/README.md): Monitor any Go application that exposes its

|

||||

- [Go applications](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/go_expvar/README.md):

|

||||

Monitor any Go application that exposes its

|

||||

metrics with the `expvar` package from the Go standard library.

|

||||

- [Java Spring Boot 2

|

||||

applications](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/springboot2/):

|

||||

- [Java Spring Boot 2 applications](https://github.com/netdata/go.d.plugin/blob/master/modules/springboot2/README.md):

|

||||

Monitor running Java Spring Boot 2 applications that expose their metrics with the use of the Spring Boot Actuator.

|

||||

- [statsd](/collectors/statsd.plugin/README.md): Implement a high performance `statsd` server for Netdata.

|

||||

- [phpDaemon](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/phpdaemon/): Collect worker

|

||||

- [statsd](https://github.com/netdata/netdata/blob/master/collectors/statsd.plugin/README.md): Implement a high

|

||||

performance `statsd` server for Netdata.

|

||||

- [phpDaemon](https://github.com/netdata/go.d.plugin/blob/master/modules/phpdaemon/README.md): Collect worker

|

||||

statistics (total, active, idle), and uptime for web and network applications.

|

||||

- [uWSGI](/collectors/python.d.plugin/uwsgi/README.md): Monitor performance metrics exposed by the uWSGI Stats

|

||||

- [uWSGI](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/uwsgi/README.md): Monitor

|

||||

performance metrics exposed by the uWSGI Stats

|

||||

Server.

|

||||

|

||||

### Containers and VMs

|

||||

|

||||

- [Docker containers](/collectors/cgroups.plugin/README.md): Monitor the health and performance of individual Docker

|

||||

containers using the cgroups collector plugin.

|

||||

- [DockerD](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/docker/): Collect container health statistics.

|

||||

- [Docker Engine](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/docker_engine/): Collect

|

||||

- [Docker containers](https://github.com/netdata/netdata/blob/master/collectors/cgroups.plugin/README.md): Monitor the

|

||||

health and performance of individual Docker containers using the cgroups collector plugin.

|

||||

- [DockerD](https://github.com/netdata/go.d.plugin/blob/master/modules/docker/README.md): Collect container health

|

||||

statistics.

|

||||

- [Docker Engine](https://github.com/netdata/go.d.plugin/blob/master/modules/docker_engine/README.md): Collect

|

||||

runtime statistics from the `docker` daemon using the `metrics-address` feature.

|

||||

- [Docker Hub](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/dockerhub/): Collect statistics

|

||||

- [Docker Hub](https://github.com/netdata/go.d.plugin/blob/master/modules/dockerhub/README.md): Collect statistics

|

||||

about Docker repositories, such as pulls, starts, status, time since last update, and more.

|

||||

- [Libvirt](/collectors/cgroups.plugin/README.md): Monitor the health and performance of individual Libvirt containers

|

||||

- [Libvirt](https://github.com/netdata/netdata/blob/master/collectors/cgroups.plugin/README.md): Monitor the health and

|

||||

performance of individual Libvirt containers

|

||||

using the cgroups collector plugin.

|

||||

- [LXC](/collectors/cgroups.plugin/README.md): Monitor the health and performance of individual LXC containers using

|

||||

- [LXC](https://github.com/netdata/netdata/blob/master/collectors/cgroups.plugin/README.md): Monitor the health and

|

||||

performance of individual LXC containers using

|

||||

the cgroups collector plugin.

|

||||

- [LXD](/collectors/cgroups.plugin/README.md): Monitor the health and performance of individual LXD containers using

|

||||

- [LXD](https://github.com/netdata/netdata/blob/master/collectors/cgroups.plugin/README.md): Monitor the health and

|

||||

performance of individual LXD containers using

|

||||

the cgroups collector plugin.

|

||||

- [systemd-nspawn](/collectors/cgroups.plugin/README.md): Monitor the health and performance of individual

|

||||

- [systemd-nspawn](https://github.com/netdata/netdata/blob/master/collectors/cgroups.plugin/README.md): Monitor the

|

||||

health and performance of individual

|

||||

systemd-nspawn containers using the cgroups collector plugin.

|

||||

- [vCenter Server Appliance](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/vcsa/): Monitor

|

||||

- [vCenter Server Appliance](https://github.com/netdata/go.d.plugin/blob/master/modules/vcsa/README.md): Monitor

|

||||

appliance system, components, and software update health statuses via the Health API.

|

||||

- [vSphere](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/vsphere/): Collect host and virtual

|

||||

- [vSphere](https://github.com/netdata/go.d.plugin/blob/master/modules/vsphere/README.md): Collect host and virtual

|

||||

machine performance metrics.

|

||||

- [Xen/XCP-ng](/collectors/xenstat.plugin/README.md): Collect XenServer and XCP-ng metrics using `libxenstat`.

|

||||

- [Xen/XCP-ng](https://github.com/netdata/netdata/blob/master/collectors/xenstat.plugin/README.md): Collect XenServer

|

||||

and XCP-ng metrics using `libxenstat`.

|

||||

|

||||

### Data stores

|

||||

|

||||

- [CockroachDB](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/cockroachdb/): Monitor various

|

||||

- [CockroachDB](https://github.com/netdata/go.d.plugin/blob/master/modules/cockroachdb/README.md): Monitor various

|

||||

database components using `_status/vars` endpoint.

|

||||

- [Consul](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/consul/): Capture service and unbound

|

||||

- [Consul](https://github.com/netdata/go.d.plugin/blob/master/modules/consul/README.md): Capture service and unbound

|

||||

checks status (passing, warning, critical, maintenance).

|

||||

- [Couchbase](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/couchbase/): Gather per-bucket

|

||||

- [Couchbase](https://github.com/netdata/go.d.plugin/blob/master/modules/couchbase/README.md): Gather per-bucket

|

||||

metrics from any number of instances of the distributed JSON document database.

|

||||

- [CouchDB](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/couchdb): Monitor database health and

|

||||

- [CouchDB](https://github.com/netdata/go.d.plugin/blob/master/modules/couchdb/README.md): Monitor database health and

|

||||

performance metrics

|

||||

(reads/writes, HTTP traffic, replication status, etc).

|

||||

- [MongoDB](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/mongodb): Collect server, database,

|

||||

- [MongoDB](https://github.com/netdata/go.d.plugin/blob/master/modules/mongodb/README.md): Collect server, database,

|

||||

replication and sharding performance and health metrics.

|

||||

- [MySQL](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/mysql/): Collect database global,

|

||||

- [MySQL](https://github.com/netdata/go.d.plugin/blob/master/modules/mysql/README.md): Collect database global,

|

||||

replication and per user statistics.

|

||||

- [OracleDB](/collectors/python.d.plugin/oracledb/README.md): Monitor database performance and health metrics.

|

||||

- [Pika](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/pika/): Gather metric, such as clients,

|

||||

- [OracleDB](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/oracledb/README.md): Monitor

|

||||

database performance and health metrics.

|

||||

- [Pika](https://github.com/netdata/go.d.plugin/blob/master/modules/pika/README.md): Gather metric, such as clients,

|

||||

memory usage, queries, and more from the Redis interface-compatible database.

|

||||

- [Postgres](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/postgres): Collect database health

|

||||

- [Postgres](https://github.com/netdata/go.d.plugin/blob/master/modules/postgres/README.md): Collect database health

|

||||

and performance metrics.

|

||||

- [ProxySQL](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/proxysql): Monitor database backend

|

||||

- [ProxySQL](https://github.com/netdata/go.d.plugin/blob/master/modules/proxysql/README.md): Monitor database backend

|

||||

and frontend performance metrics.

|

||||

- [Redis](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/redis/): Monitor status from any

|

||||

- [Redis](https://github.com/netdata/go.d.plugin/blob/master/modules/redis/README.md): Monitor status from any

|

||||

number of database instances by reading the server's response to the `INFO ALL` command.

|

||||

- [RethinkDB](/collectors/python.d.plugin/rethinkdbs/README.md): Collect database server and cluster statistics.

|

||||

- [Riak KV](/collectors/python.d.plugin/riakkv/README.md): Collect database stats from the `/stats` endpoint.

|

||||

- [Zookeeper](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/zookeeper/): Monitor application

|

||||

- [RethinkDB](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/rethinkdbs/README.md): Collect

|

||||

database server and cluster statistics.

|

||||

- [Riak KV](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/riakkv/README.md): Collect

|

||||

database stats from the `/stats` endpoint.

|

||||

- [Zookeeper](https://github.com/netdata/go.d.plugin/blob/master/modules/zookeeper/README.md): Monitor application

|

||||

health metrics reading the server's response to the `mntr` command.

|

||||

- [Memcached](/collectors/python.d.plugin/memcached/README.md): Collect memory-caching system performance metrics.

|

||||

- [Memcached](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/memcached/README.md): Collect

|

||||

memory-caching system performance metrics.

|

||||

|

||||

### Distributed computing

|

||||

|

||||

- [BOINC](/collectors/python.d.plugin/boinc/README.md): Monitor the total number of tasks, open tasks, and task

|

||||

- [BOINC](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/boinc/README.md): Monitor the total

|

||||

number of tasks, open tasks, and task

|

||||

states for the distributed computing client.

|

||||

- [Gearman](/collectors/python.d.plugin/gearman/README.md): Collect application summary (queued, running) and per-job

|

||||

- [Gearman](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/gearman/README.md): Collect

|

||||

application summary (queued, running) and per-job

|

||||

worker statistics (queued, idle, running).

|

||||

|

||||

### Email

|

||||

|

||||

- [Dovecot](/collectors/python.d.plugin/dovecot/README.md): Collect email server performance metrics by reading the

|

||||

- [Dovecot](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/dovecot/README.md): Collect email

|

||||

server performance metrics by reading the

|

||||

server's response to the `EXPORT global` command.

|

||||

- [EXIM](/collectors/python.d.plugin/exim/README.md): Uses the `exim` tool to monitor the queue length of a

|

||||

- [EXIM](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/exim/README.md): Uses the `exim` tool

|

||||

to monitor the queue length of a

|

||||

mail/message transfer agent (MTA).

|

||||

- [Postfix](/collectors/python.d.plugin/postfix/README.md): Uses the `postqueue` tool to monitor the queue length of a

|

||||

- [Postfix](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/postfix/README.md): Uses

|

||||

the `postqueue` tool to monitor the queue length of a

|

||||

mail/message transfer agent (MTA).

|

||||

|

||||

### Kubernetes

|

||||

|

||||

- [Kubelet](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/k8s_kubelet/): Monitor one or more

|

||||

- [Kubelet](https://github.com/netdata/go.d.plugin/blob/master/modules/k8s_kubelet/README.md): Monitor one or more

|

||||

instances of the Kubelet agent and collects metrics on number of pods/containers running, volume of Docker

|

||||

operations, and more.

|

||||

- [kube-proxy](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/k8s_kubeproxy/): Collect

|

||||

- [kube-proxy](https://github.com/netdata/go.d.plugin/blob/master/modules/k8s_kubeproxy/README.md): Collect

|

||||

metrics, such as syncing proxy rules and REST client requests, from one or more instances of `kube-proxy`.

|

||||

- [Service discovery](https://github.com/netdata/agent-service-discovery/): Find what services are running on a

|

||||

- [Service discovery](https://github.com/netdata/agent-service-discovery/README.md): Find what services are running on a

|

||||

cluster's pods, converts that into configuration files, and exports them so they can be monitored by Netdata.

|

||||

|

||||

### Logs

|

||||

|

||||

- [Fluentd](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/fluentd/): Gather application

|

||||

- [Fluentd](https://github.com/netdata/go.d.plugin/blob/master/modules/fluentd/README.md): Gather application

|

||||

plugins metrics from an endpoint provided by `in_monitor plugin`.

|

||||

- [Logstash](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/logstash/): Monitor JVM threads,

|

||||

- [Logstash](https://github.com/netdata/go.d.plugin/blob/master/modules/logstash/README.md): Monitor JVM threads,

|

||||

memory usage, garbage collection statistics, and more.

|

||||

- [OpenVPN status logs](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/openvpn_status_log): Parse

|

||||

- [OpenVPN status logs](https://github.com/netdata/go.d.plugin/blob/master/modules/openvpn_status_log/README.md): Parse

|

||||

server log files and provide summary (client, traffic) metrics.

|

||||

- [Squid web server logs](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/squidlog/): Tail Squid

|

||||

- [Squid web server logs](https://github.com/netdata/go.d.plugin/blob/master/modules/squidlog/README.md): Tail Squid

|

||||

access logs to return the volume of requests, types of requests, bandwidth, and much more.

|

||||

- [Web server logs (Go version for Apache,

|

||||

NGINX)](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/weblog/): Tail access logs and provide

|

||||

NGINX)](https://github.com/netdata/go.d.plugin/blob/master/modules/weblog/README.md): Tail access logs and provide

|

||||

very detailed web server performance statistics. This module is able to parse 200k+ rows in less than half a second.

|

||||

- [Web server logs (Apache, NGINX)](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/weblog): Tail

|

||||

- [Web server logs (Apache, NGINX)](https://github.com/netdata/go.d.plugin/blob/master/modules/weblog/README.md): Tail

|

||||

access log

|

||||

file and collect web server/caching proxy metrics.

|

||||

|

||||

### Messaging

|

||||

|

||||

- [ActiveMQ](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/activemq/): Collect message broker

|

||||

- [ActiveMQ](https://github.com/netdata/go.d.plugin/blob/master/modules/activemq/README.md): Collect message broker

|

||||

queues and topics statistics using the ActiveMQ Console API.

|

||||

- [Beanstalk](/collectors/python.d.plugin/beanstalk/README.md): Collect server and tube-level statistics, such as CPU

|

||||

- [Beanstalk](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/beanstalk/README.md): Collect

|

||||

server and tube-level statistics, such as CPU

|

||||

usage, jobs rates, commands, and more.

|

||||

- [Pulsar](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/pulsar/): Collect summary,

|

||||

- [Pulsar](https://github.com/netdata/go.d.plugin/blob/master/modules/pulsar/README.md): Collect summary,

|

||||

namespaces, and topics performance statistics.

|

||||

- [RabbitMQ (Go)](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/rabbitmq/): Collect message

|

||||

- [RabbitMQ (Go)](https://github.com/netdata/go.d.plugin/blob/master/modules/rabbitmq/README.md): Collect message

|

||||

broker overview, system and per virtual host metrics.

|

||||

- [RabbitMQ (Python)](/collectors/python.d.plugin/rabbitmq/README.md): Collect message broker global and per virtual

|

||||

- [RabbitMQ (Python)](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/rabbitmq/README.md):

|

||||

Collect message broker global and per virtual

|

||||

host metrics.

|

||||

- [VerneMQ](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/vernemq/): Monitor MQTT broker

|

||||

- [VerneMQ](https://github.com/netdata/go.d.plugin/blob/master/modules/vernemq/README.md): Monitor MQTT broker

|

||||

health and performance metrics. It collects all available info for both MQTTv3 and v5 communication

|

||||

|

||||

### Network

|

||||

|

||||

- [Bind 9](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/bind/): Collect nameserver summary

|

||||

- [Bind 9](https://github.com/netdata/go.d.plugin/blob/master/modules/bind/README.md): Collect nameserver summary

|

||||

performance statistics via a web interface (`statistics-channels` feature).

|

||||

- [Chrony](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/chrony): Monitor the precision and

|

||||

- [Chrony](https://github.com/netdata/go.d.plugin/blob/master/modules/chrony/README.md): Monitor the precision and

|

||||

statistics of a local `chronyd` server.

|

||||

- [CoreDNS](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/coredns/): Measure DNS query round

|

||||

- [CoreDNS](https://github.com/netdata/go.d.plugin/blob/master/modules/coredns/README.md): Measure DNS query round

|

||||

trip time.

|

||||

- [Dnsmasq](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/dnsmasq_dhcp/): Automatically

|

||||

- [Dnsmasq](https://github.com/netdata/go.d.plugin/blob/master/modules/dnsmasq_dhcp/README.md): Automatically

|

||||

detects all configured `Dnsmasq` DHCP ranges and monitors their utilization.

|

||||

- [DNSdist](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/dnsdist/): Collect

|

||||

- [DNSdist](https://github.com/netdata/go.d.plugin/blob/master/modules/dnsdist/README.md): Collect

|

||||

load-balancer performance and health metrics.

|

||||

- [Dnsmasq DNS Forwarder](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/dnsmasq/): Gather

|

||||

- [Dnsmasq DNS Forwarder](https://github.com/netdata/go.d.plugin/blob/master/modules/dnsmasq/README.md): Gather

|

||||

queries, entries, operations, and events for the lightweight DNS forwarder.

|

||||

- [DNS Query Time](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/dnsquery/): Monitor the round

|

||||

- [DNS Query Time](https://github.com/netdata/go.d.plugin/blob/master/modules/dnsquery/README.md): Monitor the round

|

||||

trip time for DNS queries in milliseconds.

|

||||

- [Freeradius](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/freeradius/): Collect

|

||||

- [Freeradius](https://github.com/netdata/go.d.plugin/blob/master/modules/freeradius/README.md): Collect

|

||||

server authentication and accounting statistics from the `status server`.

|

||||

- [Libreswan](/collectors/charts.d.plugin/libreswan/README.md): Collect bytes-in, bytes-out, and uptime metrics.

|

||||

- [Icecast](/collectors/python.d.plugin/icecast/README.md): Monitor the number of listeners for active sources.

|

||||

- [ISC Bind (RDNC)](/collectors/python.d.plugin/bind_rndc/README.md): Collect nameserver summary performance

|

||||

- [Libreswan](https://github.com/netdata/netdata/blob/master/collectors/charts.d.plugin/libreswan/README.md): Collect

|

||||

bytes-in, bytes-out, and uptime metrics.

|

||||

- [Icecast](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/icecast/README.md): Monitor the

|

||||

number of listeners for active sources.

|

||||

- [ISC Bind (RDNC)](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/bind_rndc/README.md):

|

||||

Collect nameserver summary performance

|

||||

statistics using the `rndc` tool.

|

||||

- [ISC DHCP](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/isc_dhcpd): Reads a

|

||||

- [ISC DHCP](https://github.com/netdata/go.d.plugin/blob/master/modules/isc_dhcpd/README.md): Reads a

|

||||

`dhcpd.leases` file and collects metrics on total active leases, pool active leases, and pool utilization.

|

||||

- [OpenLDAP](/collectors/python.d.plugin/openldap/README.md): Provides statistics information from the OpenLDAP

|

||||

- [OpenLDAP](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/openldap/README.md): Provides

|

||||

statistics information from the OpenLDAP

|

||||

(`slapd`) server.

|

||||

- [NSD](/collectors/python.d.plugin/nsd/README.md): Monitor nameserver performance metrics using the `nsd-control`

|

||||

- [NSD](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/nsd/README.md): Monitor nameserver

|

||||

performance metrics using the `nsd-control`

|

||||

tool.

|

||||

- [NTP daemon](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/ntpd): Monitor the system variables

|

||||

of the local `ntpd` daemon (optionally including variables of the polled peers) using the NTP Control Message Protocol

|

||||

via a UDP socket.

|

||||

- [OpenSIPS](/collectors/charts.d.plugin/opensips/README.md): Collect server health and performance metrics using the

|

||||

- [OpenSIPS](https://github.com/netdata/netdata/blob/master/collectors/charts.d.plugin/opensips/README.md): Collect

|

||||

server health and performance metrics using the

|

||||

`opensipsctl` tool.

|

||||

- [OpenVPN](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/openvpn/): Gather server summary

|

||||

- [OpenVPN](https://github.com/netdata/go.d.plugin/blob/master/modules/openvpn/README.md): Gather server summary

|

||||

(client, traffic) and per user metrics (traffic, connection time) stats using `management-interface`.

|

||||

- [Pi-hole](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/pihole/): Monitor basic (DNS

|

||||

- [Pi-hole](https://github.com/netdata/go.d.plugin/blob/master/modules/pihole/README.md): Monitor basic (DNS

|

||||

queries, clients, blocklist) and extended (top clients, top permitted, and blocked domains) statistics using the PHP

|

||||

API.

|

||||

- [PowerDNS Authoritative Server](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/powerdns):

|

||||

- [PowerDNS Authoritative Server](https://github.com/netdata/go.d.plugin/blob/master/modules/powerdns/README.md):

|

||||

Monitor one or more instances of the nameserver software to collect questions, events, and latency metrics.

|

||||

- [PowerDNS Recursor](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/powerdns_recursor):

|

||||

- [PowerDNS Recursor](https://github.com/netdata/go.d.plugin/blob/master/modules/powerdns_recursor/README.md):

|

||||

Gather incoming/outgoing questions, drops, timeouts, and cache usage from any number of DNS recursor instances.

|

||||

- [RetroShare](/collectors/python.d.plugin/retroshare/README.md): Monitor application bandwidth, peers, and DHT

|

||||

- [RetroShare](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/retroshare/README.md): Monitor

|

||||

application bandwidth, peers, and DHT

|

||||

metrics.

|

||||

- [Tor](/collectors/python.d.plugin/tor/README.md): Capture traffic usage statistics using the Tor control port.

|

||||

- [Unbound](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/unbound/): Collect DNS resolver

|

||||

- [Tor](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/tor/README.md): Capture traffic usage

|

||||

statistics using the Tor control port.

|

||||

- [Unbound](https://github.com/netdata/go.d.plugin/blob/master/modules/unbound/README.md): Collect DNS resolver

summary and extended system and per thread metrics via the `remote-control` interface.

### Provisioning

- [Puppet](/collectors/python.d.plugin/puppet/README.md): Monitor the status of Puppet Server and Puppet DB.

- [Puppet](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/puppet/README.md): Monitor the

status of Puppet Server and Puppet DB.

### Remote devices

- [AM2320](/collectors/python.d.plugin/am2320/README.md): Monitor sensor temperature and humidity.

- [Access point](/collectors/charts.d.plugin/ap/README.md): Monitor client, traffic and signal metrics using the `aw`

- [AM2320](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/am2320/README.md): Monitor sensor

temperature and humidity.

- [Access point](https://github.com/netdata/netdata/blob/master/collectors/charts.d.plugin/ap/README.md): Monitor

client, traffic and signal metrics using the `aw`

tool.

- [APC UPS](/collectors/charts.d.plugin/apcupsd/README.md): Capture status information using the `apcaccess` tool.

- [Energi Core](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/energid): Monitor

- [APC UPS](https://github.com/netdata/netdata/blob/master/collectors/charts.d.plugin/apcupsd/README.md): Capture status

information using the `apcaccess` tool.

- [Energi Core](https://github.com/netdata/go.d.plugin/blob/master/modules/energid/README.md): Monitor

blockchain indexes, memory usage, network usage, and transactions of wallet instances.

- [UPS/PDU](/collectors/charts.d.plugin/nut/README.md): Read the status of UPS/PDU devices using the `upsc` tool.

- [SNMP devices](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/snmp): Gather data using the SNMP

- [UPS/PDU](https://github.com/netdata/netdata/blob/master/collectors/charts.d.plugin/nut/README.md): Read the status of

UPS/PDU devices using the `upsc` tool.

- [SNMP devices](https://github.com/netdata/go.d.plugin/blob/master/modules/snmp/README.md): Gather data using the SNMP

protocol.

- [1-Wire sensors](/collectors/python.d.plugin/w1sensor/README.md): Monitor sensor temperature.

- [1-Wire sensors](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/w1sensor/README.md):

Monitor sensor temperature.

### Search

- [Elasticsearch](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/elasticsearch): Collect

- [Elasticsearch](https://github.com/netdata/go.d.plugin/blob/master/modules/elasticsearch/README.md): Collect

dozens of metrics on search engine performance from local nodes and local indices. Includes cluster health and

statistics.

- [Solr](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/solr/): Collect application search

- [Solr](https://github.com/netdata/go.d.plugin/blob/master/modules/solr/README.md): Collect application search

requests, search errors, update requests, and update errors statistics.

### Storage

- [Ceph](/collectors/python.d.plugin/ceph/README.md): Monitor the Ceph cluster usage and server data consumption.

- [HDFS](https://learn.netdata.cloud/docs/agent/collectors/go.d.plugin/modules/hdfs/): Monitor health and performance

- [Ceph](https://github.com/netdata/netdata/blob/master/collectors/python.d.plugin/ceph/README.md): Monitor the Ceph

cluster usage and server data consumption.
